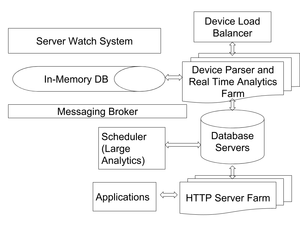

In this blog post, we present a scalable IoT platform implemented with the JavaScript-based MEAN stack. Scalability, security and fault tolerance were the key features we were looking for while building this platform to support multiple GPS- and OBD-based devices.

The entire stack is easily portable to any UNIX machine. An engineer can install the entire stack on any cloud service (AWS, Azure) or even on a UNIX laptop.

What is MEAN?

MEAN is an acronym for MongoDB, Express, Angular and Node.js. All components of the MEAN stack are JavaScript based, so MEAN is full-stack JavaScript from the client side to the database. For us, that meant the tech team would have to master only one language (which did not turn out to be true).

Why MEAN is suitable for scalable IoT platforms

Our devices are small and cheap, and they constantly send small amounts of information over a TCP socket. An ideal server should be scalable and able to handle many concurrent connections. Node.js fit these requirements very well. Node is inherently event driven, so we do not have to do any thread or connection management, and writing TCP servers with Node.js is quite simple.

We support several different devices from different vendors. These devices have different levels of complexity and communicate in very different protocols. We save data from all these devices and make all of it available through the same APIs. Schema-less MongoDB gave us the flexibility to add multiple devices without having to worry about how to manage ‘columns’. MongoDB databases can also be made scalable and fault tolerant with sharding and replication.
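To illustrate why a schema-less store helps here, the sketch below normalizes payloads from two devices into documents that share common fields but differ in the extras they carry. The vendor names, payload formats and field names are all hypothetical; they are not taken from any real device on the platform.

```javascript
// Hypothetical parsers: neither payload format comes from a real vendor.
const parsers = {
  // "Vendor A" sends comma-separated text: "<id>,<lat>,<lng>,<speed>"
  vendorA(raw) {
    const [deviceId, lat, lng, speed] = raw.split(',');
    return { deviceId, lat: Number(lat), lng: Number(lng), speed: Number(speed) };
  },
  // "Vendor B" sends JSON and also reports engine RPM read over OBD
  vendorB(raw) {
    const msg = JSON.parse(raw);
    return { deviceId: msg.id, lat: msg.pos[0], lng: msg.pos[1], rpm: msg.obd.rpm };
  },
};

// Turn a raw payload into a document ready to be stored.
// Documents from different vendors need not have the same set of fields.
function toDocument(vendor, raw, receivedAt = new Date()) {
  const parse = parsers[vendor];
  if (!parse) throw new Error(`Unknown vendor: ${vendor}`);
  return { vendor, receivedAt, ...parse(raw) };
}
```

Both documents can live in the same collection: one carries `speed`, the other `rpm`, and no column has to be added for either.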

I will not go into much detail about each constituent of MEAN. The entire stack is open source and there is a lot of information available on the web.

Device Load Balancer

All devices connect to the device load balancer. The device load balancer distributes the TCP connections to a cluster of device connectors. This load balancer is implemented with HAProxy. It supports sticky connections, i.e. the device talks to the same server in the cluster in both directions. Stickiness was a requirement for some of the devices.
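The idea behind stickiness can be sketched in a few lines: if the backend is chosen by hashing the device's source address, the same device always lands on the same server while the server list stays unchanged. This is only an illustration of the concept, not HAProxy's implementation or our configuration; the function name and the backend names are made up.

```javascript
const crypto = require('crypto');

// Pick a backend deterministically from the source IP.
// The same IP maps to the same backend as long as `backends` is unchanged.
function pickBackend(sourceIp, backends) {
  if (backends.length === 0) throw new Error('No backends available');
  const digest = crypto.createHash('md5').update(sourceIp).digest();
  // Use the first 4 bytes of the hash as an unsigned integer
  return backends[digest.readUInt32BE(0) % backends.length];
}
```

A call such as `pickBackend('10.0.0.7', ['connector-1', 'connector-2'])` returns the same connector every time for that address.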

Device Parser

The farm of device parsers consists of several servers, and each parser talks to many devices simultaneously. The device parser is a TCP server implemented in Node.js. These servers talk to the devices, translate the data, and run real-time analytics on demand. They are small, lightweight machines. To ensure high availability, we do the following:

- Enable large swap memories

- The application runs continuously using forever. Forever restarts the application in case it crashes.

- Improve reuse of TCP connections

- Build redundancy by running extra servers
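As a rough idea of what a Node.js device parser looks like, here is a minimal TCP server sketch. It assumes a hypothetical protocol in which each message is one line of text terminated by a newline; real devices use their own framing, so `extractFrames` would differ per protocol.

```javascript
const net = require('net');

// Split buffered text into complete newline-terminated frames,
// returning any incomplete tail so it can be kept for the next chunk.
function extractFrames(buffered) {
  const parts = buffered.split('\n');
  const rest = parts.pop(); // text after the last newline (may be '')
  return { frames: parts.filter((frame) => frame.length > 0), rest };
}

// Create a TCP server that calls onFrame(frame, socket) for every complete frame.
function createDeviceServer(onFrame) {
  return net.createServer((socket) => {
    let buffered = '';
    socket.setEncoding('utf8');
    socket.setKeepAlive(true); // assumption: keep idle device connections open
    socket.on('data', (chunk) => {
      const { frames, rest } = extractFrames(buffered + chunk);
      buffered = rest;
      frames.forEach((frame) => onFrame(frame, socket));
    });
    // Log and move on: one misbehaving device should not crash the process
    socket.on('error', (err) => console.error('device socket error:', err.message));
  });
}
```

It could be started with `createDeviceServer((frame) => console.log(frame)).listen(5000)`, where port 5000 is just an example. Because TCP delivers a byte stream, a message may arrive split across several `data` events, which is why the incomplete tail is buffered.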

MQTT Broker

MQTT is a real-time, lightweight, publish/subscribe messaging protocol. The MQTT broker enables real-time communication between devices, servers, and client web and mobile applications. We implemented the MQTT broker in Node.js using Mosca. MQTT is easy to implement and consumes little power and bandwidth. MQTT clients can be implemented on Android, iOS, Node.js and in browser JavaScript.
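Publish/subscribe in MQTT is driven by topic filters: `+` matches exactly one topic level and `#` matches all remaining levels. The sketch below shows that matching rule in isolation. It is a simplified illustration, not Mosca's code: it does not validate that `#` is the last level of the filter and ignores the special handling of topics starting with `$`. The topic names are hypothetical.

```javascript
// Return true if an MQTT topic filter matches a concrete topic name.
function topicMatches(filter, topic) {
  const f = filter.split('/');
  const t = topic.split('/');
  for (let i = 0; i < f.length; i++) {
    if (f[i] === '#') return true;   // multi-level wildcard: matches the rest, including the parent level
    if (i >= t.length) return false; // filter has more levels than the topic
    if (f[i] !== '+' && f[i] !== t[i]) return false; // '+' matches any single level
  }
  return f.length === t.length;      // no unmatched topic levels left over
}
```

A live-tracking client could, for example, subscribe to `vehicles/+/location` to receive location updates from every vehicle without receiving any other kind of message.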

In-Memory DB

Redis is used as an in-memory datastore shared by multiple servers. The device parsers, real-time analytics and scheduled analytics servers use Redis as a fast memory cache. Redis is a NoSQL key-value datastore and is much faster than conventional databases. Our benchmarking tests showed that Redis was 30 times faster than a conventional database.
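The usual way to use such a cache is the cache-aside pattern: look in the cache first, fall back to the database on a miss, and store the result with an expiry. The sketch below shows the pattern with a plain `Map` standing in for Redis so that it runs without any dependencies; `TtlCache`, `cached` and `loadFromDb` are illustrative names, and a real deployment would talk to Redis through a client library instead.

```javascript
// In-process stand-in for a key-value store with per-key expiry.
class TtlCache {
  constructor(now = () => Date.now()) {
    this.entries = new Map();
    this.now = now; // injectable clock, handy for testing
  }

  set(key, value, ttlMs) {
    this.entries.set(key, { value, expiresAt: this.now() + ttlMs });
  }

  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (entry.expiresAt <= this.now()) {
      this.entries.delete(key); // expired: drop it and report a miss
      return undefined;
    }
    return entry.value;
  }
}

// Cache-aside: serve from the cache when possible, otherwise load and remember.
function cached(cache, key, ttlMs, loadFromDb) {
  const hit = cache.get(key);
  if (hit !== undefined) return hit;
  const value = loadFromDb(key);
  cache.set(key, value, ttlMs);
  return value;
}
```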

Schedulers

The schedulers regularly run heavy analytics, web scraping, machine learning and server management tasks. The frequency of these operations varies based on requirements. The scheduler is written in Node.js. Most of the analytics and management scripts are written in Python.
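A Node.js scheduler that drives Python scripts can be quite small. The following is a minimal sketch under assumed names: the task fields (`script`, `everyMs`, `lastRunMs`) are hypothetical, and it assumes a `python3` executable is available on the machine.

```javascript
const { spawn } = require('child_process');

// Return the tasks whose interval has elapsed since their last run.
function dueTasks(tasks, nowMs) {
  return tasks.filter((task) => nowMs - task.lastRunMs >= task.everyMs);
}

// Launch a task's Python script as a child process.
// stdio: 'inherit' sends the script's output to the scheduler's own logs.
function runTask(task) {
  return spawn('python3', [task.script], { stdio: 'inherit' });
}
```

A timer can then call `dueTasks(tasks, Date.now())` periodically, run each returned task with `runTask`, and update its `lastRunMs`.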

Server Watch Sentinels

Our IoT platform has several components, and ensuring that all applications are up was a key challenge. We wrote a Server Watch Sentinel to ensure that all the load balancers, HTTP servers, device parsers, applications, brokers, config servers, database shards and mongos clients are up and active. It is a Node.js program hosted on multiple servers in the VPC, and each instance runs every hour. We run the sentinels on multiple servers for two reasons:

- Redundancy: If the machine hosting the sentinel goes down, we have no way of knowing it immediately. When we run the sentinel on multiple servers and one server goes down, a sentinel on another server identifies the failure and escalates.

- Frequent checking: The sentinels run every hour and the triggering minute is different on different servers. With this approach, we manage to check the applications a few times every hour.

If any application or server goes down, the sentinel informs the devops team by email. The devops team reviews the logs and then carries out a root cause and corrective action (RCCA) analysis to ensure the issue does not happen again.
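The two ideas above, staggered trigger minutes and a liveness check, can be sketched as follows. This is an illustration rather than the sentinel itself: spreading the trigger minutes evenly across the hour is an assumption, and a plain TCP connect is only one possible way to decide that a service is up.

```javascript
const net = require('net');

// Spread sentinels evenly over the hour: with 3 sentinels, minutes 0, 20 and 40.
function triggerMinute(sentinelIndex, sentinelCount) {
  return Math.floor((60 / sentinelCount) * sentinelIndex) % 60;
}

// Resolve to true if a TCP connection to host:port succeeds within the timeout.
function checkPort(host, port, timeoutMs = 3000) {
  return new Promise((resolve) => {
    const socket = net.connect({ host, port });
    const finish = (isUp) => {
      socket.destroy(); // always release the socket
      resolve(isUp);
    };
    socket.setTimeout(timeoutMs);
    socket.once('connect', () => finish(true));
    socket.once('timeout', () => finish(false));
    socket.once('error', () => finish(false));
  });
}
```

With three sentinels staggered this way, every service is checked roughly every 20 minutes even though each sentinel runs only once an hour.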

HTTP load balancer

The HTTP API servers sit behind a load balancer. Yatis uses an AWS Elastic Load Balancer (ELB) with an Auto Scaling Group. This load balancer can be a standard offering from your cloud provider, or a custom load balancer built with NGINX or HAProxy.

API Server

Most of our customers consume data from Yatis over APIs to power their in-house applications. We chose Express.js, a Node.js-based web application framework, for our API server. To secure access to the APIs, we use JSON Web Tokens (JWT) and Passport.js. We use Swagger.io to document the APIs for customers.
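For readers unfamiliar with JWT, the sketch below shows what signing and verifying an HS256 token involves, using only Node's built-in `crypto` module. It is meant to explain the mechanism, not to replace a maintained JWT library or Passport.js in production; the function names are illustrative, and the `base64url` encoding assumes a reasonably recent Node.js version.

```javascript
const crypto = require('crypto');

const b64url = (value) => Buffer.from(value).toString('base64url');
const hmac = (data, secret) => crypto.createHmac('sha256', secret).update(data).digest();

// Build "<header>.<payload>.<signature>" signed with a shared secret.
function sign(payload, secret) {
  const header = b64url(JSON.stringify({ alg: 'HS256', typ: 'JWT' }));
  const body = b64url(JSON.stringify(payload));
  return `${header}.${body}.${b64url(hmac(`${header}.${body}`, secret))}`;
}

// Return the payload if the signature is valid and the token has not expired, else null.
function verify(token, secret, nowSec = Math.floor(Date.now() / 1000)) {
  const [header, body, signature] = token.split('.');
  if (!header || !body || !signature) return null;
  const expected = hmac(`${header}.${body}`, secret);
  const given = Buffer.from(signature, 'base64url');
  // Constant-time comparison avoids leaking how many bytes matched
  if (given.length !== expected.length || !crypto.timingSafeEqual(given, expected)) return null;
  const payload = JSON.parse(Buffer.from(body, 'base64url').toString('utf8'));
  if (typeof payload.exp === 'number' && payload.exp <= nowSec) return null;
  return payload;
}
```

An API server would issue such a token at login and verify it on every request, typically reading it from the `Authorization` header.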

Applications

We used Angular.js to create all web applications. Angular makes it easy to develop, maintain and reuse complex, dynamic front-end applications. D3 powers all the charts and graphs that bring the applications to life. The web applications also embed an MQTT client for live tracking.

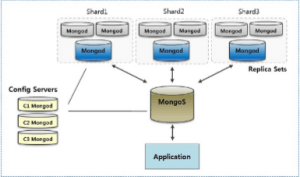

Mongo Database

We use MongoDB to build a scalable, fault-tolerant, and always-available database. The Yatis database is both vertically and horizontally scalable, using shards and replica sets. Our database consists of the following:

Mongos Routers

Mongos instances route all read and write operations from an application to shards. We embedded a mongos instance in all application servers in the IoT platform, including device connectors, API servers and schedulers.

Mongo Config Servers

The Mongo config server setup consists of a 3-member replica set. The config servers store metadata, i.e. information about the organization and state of the data in the sharded cluster.

Mongo Shards

Mongo shards contain all the data on the IoT platform. Each shard is a 3-member replica set, i.e. every piece of data has two redundant copies. As the amount of data increases, we add more shards to the platform while keeping it active. This setup also allows for seamless replacement of any of the Mongo shards or config servers while keeping the applications unaffected.
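The availability of a replica set follows from simple majority arithmetic: a primary can only be elected while a majority of the voting members can reach each other. The sketch below works this out, assuming every member is a voting member; the function names are illustrative.

```javascript
// Number of voting members needed to elect a primary.
function majority(members) {
  return Math.floor(members / 2) + 1;
}

// How many members can be lost while a primary can still be elected.
function faultTolerance(members) {
  return members - majority(members);
}

// Whether the set can elect a primary with `reachable` members up.
function canElectPrimary(members, reachable) {
  return reachable >= majority(members);
}
```

For a 3-member replica set the majority is 2, so the set keeps accepting writes with one member down, and the data itself still exists in three copies. This is what makes it possible to replace one member at a time without taking the shard offline.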

More details about this stack can be found on StackShare.